GPT-3 for dummies. How the most advanced neural network in the world works.

If you haven’t been paying attention to the amazing things AI can do, now is the time to do so.

Users on the net are going crazy for the GPT-3 interactive tool. The ways it can be used are remarkably versatile. Generative Pre-trained Transformer 3 (GPT-3) is the third generation of OpenAI’s natural language processing model. It can become a real helper, because it tries to do what you ask of it in plain language.

If you want to look into the future, take a look at how developers are already using GPT-3. Our world is full of examples of AI in use, from product recommendations on Amazon to self-driving cars. AI has found its way almost everywhere. There is even a good chance that a recommendation algorithm led you to this article.

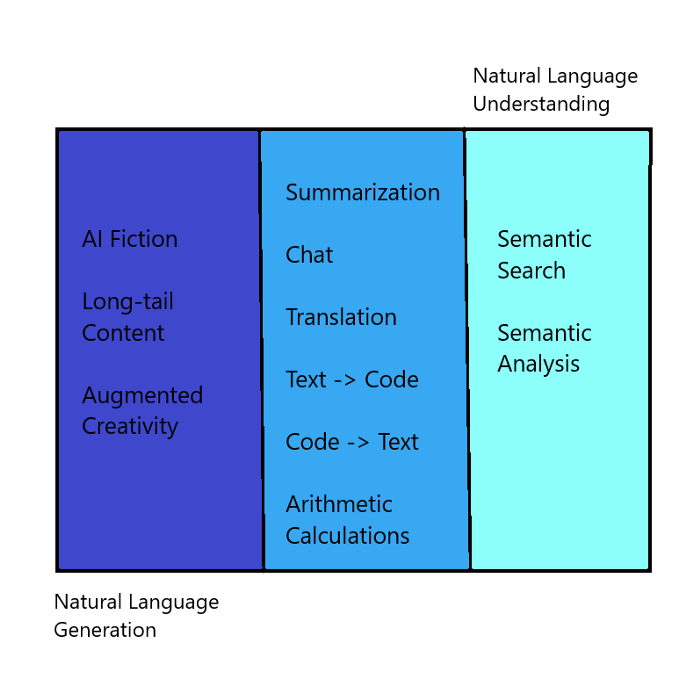

GPT-3 is a language model that can perform a wide range of natural language processing tasks. It can act as a writer, journalist, poet, author, researcher or bot. Some researchers also see GPT-3 as a first step towards Artificial General Intelligence (AGI). AGI is the ability of a machine to learn and perform any intellectual task that a human can.

There was a time when algorithms like this were a real mystery even to scientists. However, as the technology has developed, it has become more and more accessible to both professionals and ordinary users. To understand the basics of how GPT-3 works, we must become familiar with the principles of machine learning, which is the key to how this algorithm works. Machine learning is a branch of artificial intelligence. It allows a machine to improve with experience instead of being programmed rule by rule.

There are two main types of machine learning algorithms: supervised and unsupervised.

Supervised learning includes algorithms that need labeled data. To picture it, imagine your machine is a 5-year-old child. You want to teach the child how to read and then check whether the skill has been learned. In supervised learning, we give the machine examples together with the correct answers (labels). After that, we test it on new examples to see whether it has learned anything.

People, of course, acquire some skills through supervised learning. However, most of the time they learn by analyzing their own experience and trying to predict what comes next by intuition. This is precisely the principle of unsupervised learning: finding patterns in data that nobody has labeled.
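The difference between the two approaches can be sketched in a few lines of Python. This is a toy illustration, not how GPT-3 is trained: the data (hours of reading practice), the labels, and the helper names `fit_centroids`, `predict`, and `two_means` are all made up for the example.

```python
# Toy 1-D data: hours of reading practice per week (made-up numbers).
hours = [1.0, 1.5, 2.0, 8.0, 9.0, 9.5]

# Supervised: every example comes with a label supplied by a "teacher".
labels = ["beginner", "beginner", "beginner", "fluent", "fluent", "fluent"]


def fit_centroids(xs, ys):
    """Average the examples of each label to get one centroid per class."""
    sums, counts = {}, {}
    for x, y in zip(xs, ys):
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}


def predict(centroids, x):
    """Return the label whose centroid is closest to x."""
    return min(centroids, key=lambda y: abs(centroids[y] - x))


# Unsupervised: no labels at all; the algorithm has to find the groups itself.
def two_means(xs, steps=10):
    """Tiny k-means with k=2: returns the two cluster centres it discovers."""
    lo, hi = min(xs), max(xs)  # start from the two extreme points
    for _ in range(steps):
        left = [x for x in xs if abs(x - lo) <= abs(x - hi)]
        right = [x for x in xs if abs(x - lo) > abs(x - hi)]
        lo, hi = sum(left) / len(left), sum(right) / len(right)
    return lo, hi


centroids = fit_centroids(hours, labels)
print(predict(centroids, 7.0))  # label learned from the teacher's examples
print(two_means(hours))         # group centres found without any labels
```

Both halves end up with the same two groups here, but only the supervised half knows what to call them, because only it was given names for the groups.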

Supervised vs. Unsupervised Learning

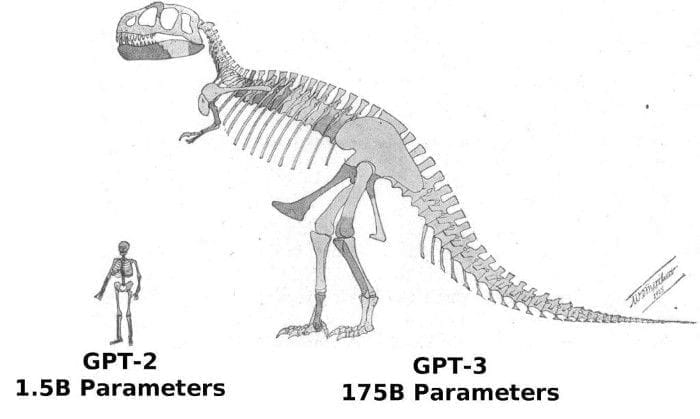

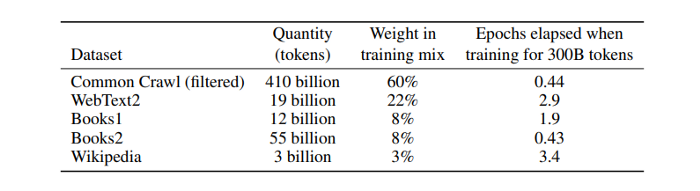

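GPT-3 uses unsupervised learning: it is trained to predict the next word in unlabeled text. It is also capable of what its authors call meta-learning, that is, picking up a new task from a few examples in the prompt without any additional training. Most of the GPT-3 training corpus comes from the Common Crawl dataset, which amounted to about 45 TB of compressed text from the Internet before filtering. GPT-3 has 175 billion model parameters, while the human brain is often estimated to have around 100 trillion synapses.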

By one speculative estimate, a model would need about 4.398 trillion parameters to understand the principles of life on Earth, the scale of the universe and other human subtleties. The pace at which GPT is growing is striking: GPT-3 has more than 100 times as many parameters as GPT-2, released only about a year earlier, which is both surprising and worrisome.
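The growth rate is easy to check with a little arithmetic. The sketch below uses the publicly reported parameter counts for the three GPT generations; the GPT-1 and GPT-2 figures are not from this article and are included only for comparison, as is the rough synapse figure.

```python
# Publicly reported parameter counts (GPT-1 and GPT-2 are added for comparison
# and do not appear in the article itself).
params = {
    "GPT-1": 117e6,   # 2018
    "GPT-2": 1.5e9,   # 2019
    "GPT-3": 175e9,   # 2020
}

growth_1_to_2 = params["GPT-2"] / params["GPT-1"]
growth_2_to_3 = params["GPT-3"] / params["GPT-2"]

# Rough, commonly quoted figure for synapses in a human brain (an assumption).
brain_synapses = 100e12
gap_to_brain = brain_synapses / params["GPT-3"]

print(f"GPT-1 -> GPT-2: x{growth_1_to_2:.1f}")
print(f"GPT-2 -> GPT-3: x{growth_2_to_3:.1f}")
print(f"Synapses per GPT-3 parameter: about {gap_to_brain:.0f}")
```

Under these figures the jump was roughly 13-fold from GPT-1 to GPT-2 and roughly 117-fold from GPT-2 to GPT-3, so "100 times a year" describes the latest step rather than a steady trend.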

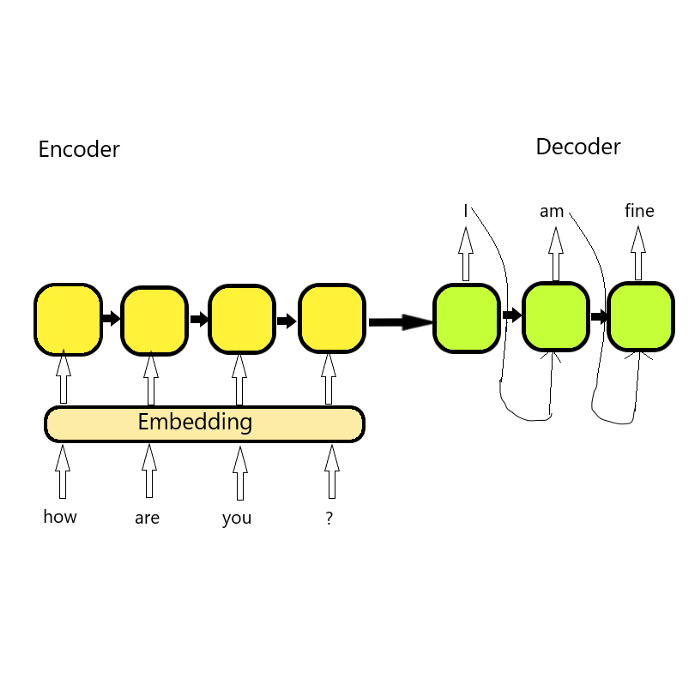

Machine learning models work with numbers, and on numerical data they can be very accurate. Human language, however, is their weak point, because text is not numbers. To overcome this shortcoming, we convert text to numbers using embeddings (vectors that represent words) and pass these to our machines. The machines then use encoders and decoders to work out what the message means.
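Here is a minimal sketch of what "converting text to numbers" can look like. It is an illustration under simplifying assumptions: the sentence, the whitespace tokenization and the random 4-dimensional vectors are made up, whereas GPT-3 splits text into subword tokens and learns its embedding vectors during training.

```python
import random

sentence = "the cat sat on the mat"
tokens = sentence.split()  # naive whitespace tokenization (an assumption)

# Step 1: give every distinct word an integer id.
vocab = {word: idx for idx, word in enumerate(sorted(set(tokens)))}
token_ids = [vocab[word] for word in tokens]

# Step 2: look each id up in an embedding table, one vector per word.
# Real models learn these vectors; here they are random placeholders.
EMBED_DIM = 4
rng = random.Random(0)
embedding_table = [
    [rng.uniform(-1.0, 1.0) for _ in range(EMBED_DIM)] for _ in vocab
]
vectors = [embedding_table[i] for i in token_ids]

print(vocab)
print(token_ids)
print(len(vectors), "vectors of dimension", EMBED_DIM)
```

Note that both occurrences of "the" map to the same id and therefore to the same vector; it is the job of the later layers to tell them apart by context.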

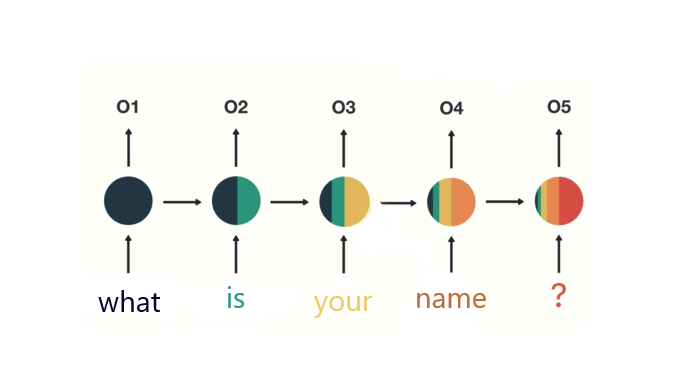

Recurrent Neural Networks (RNNs) are a class of neural networks that are good for modeling sequential data such as time series or natural language. However, with long texts an RNN tends to lose track of the earlier parts of the message. To capture the message better, we use the attention mechanism, which is the core of the Transformer architecture that GPT-3 is built on. It is loosely similar to how our brain handles information: it keeps the important data in focus and pushes the rest into the background. The attention mechanism assigns a score to each embedding, and these importance scores make it possible to filter out irrelevant information.
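The scoring idea can be sketched as scaled dot-product attention, the variant used in Transformers. This is a bare-bones illustration with made-up 2-dimensional vectors; the function names `softmax` and `attend` are chosen for the example, and real models add learned projections and many attention heads.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Score every key against the query, then average the values by score."""
    dim = len(query)
    scores = [
        sum(q * k for q, k in zip(query, key)) / math.sqrt(dim) for key in keys
    ]
    weights = softmax(scores)
    width = len(values[0])
    output = [
        sum(w * value[j] for w, value in zip(weights, values))
        for j in range(width)
    ]
    return weights, output


# Three made-up word embeddings; the query "looks for" the first direction.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = keys
query = [1.0, 0.0]

weights, output = attend(query, keys, values)
print([round(w, 3) for w in weights])
print([round(x, 3) for x in output])
```

The second vector has nothing in common with the query, so it receives the smallest weight and contributes least to the result, which is the "filtering out irrelevant information" described above.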

How RNNs work

When we send our text data to the machine, it goes through the encoder and vectors are created. The resulting vectors are then analyzed by the attention mechanism. This combined process helps predict the next word a person will say. Simply put, the machine is "filling in the gaps" based on how confident it is in its knowledge. As it is trained on more language, the machine gets better at predicting the next words; with enough examples, it can rank the possible options quite accurately. Next, we send the predicted word, together with the text so far, to the decoder. This cycle repeats, one word at a time, for as long as new text is needed.
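The predict-then-append cycle can be shown with a deliberately tiny stand-in for a language model. The sketch below counts which word follows which in a made-up corpus and always picks the most frequent follower; GPT-3 replaces these counts with a large neural network, but the generation loop has the same shape. The names `predict_next` and `generate` are made up for the example.

```python
from collections import Counter, defaultdict

# A made-up training corpus, already split into words.
corpus = "the cat sat on the mat . the cat sat on the sofa .".split()

# "Training": count how often each word follows each other word.
follow = defaultdict(Counter)
for prev, nxt in zip(corpus, corpus[1:]):
    follow[prev][nxt] += 1


def predict_next(word):
    """Return the most frequent follower of `word` and its estimated probability.

    Only works for words seen in the corpus with at least one follower.
    """
    counts = follow[word]
    best, n = counts.most_common(1)[0]
    return best, n / sum(counts.values())


def generate(start, length):
    """Repeatedly predict the next word and feed it back in as the new input."""
    words = [start]
    for _ in range(length):
        nxt, _prob = predict_next(words[-1])
        words.append(nxt)
    return " ".join(words)


print(predict_next("the"))
print(generate("the", 4))
```

After "the" the model has seen "cat" twice and "mat" and "sofa" once each, so it predicts "cat" with probability 0.5: its confidence comes straight from what it has read.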

The main strength of GPT-3 is its huge training corpus and the breadth of what it can learn from it. The training itself is not domain-specific, but it allows the algorithm to pick up how to perform domain-specific tasks. Ask the algorithm to write a SQL query and it will often do the trick. Ask it to help write a text on a sports topic, and it can do that too.

Source: GPT-3 Paper

GPT-3 works better in a few-shot setting, that is, when you provide it with a prompt and some worked examples. Imagine that you gave a couple of books to a freshman and asked her to answer questions. Sometimes she succeeds, and sometimes she fails. So you keep giving her more books and asking more questions, which helps her improve her knowledge. She does this by reading new material and looking at examples of similar questions.
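In practice, "a prompt and some examples" is just text. The sketch below builds such a few-shot prompt for English-to-French translation; the function name `build_few_shot_prompt` and the exact layout are assumptions made for illustration, and no API call is shown because the prompt is the part that matters here.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Lay out an instruction, worked examples, and the new question as text."""
    lines = [instruction, ""]
    for source, target in examples:
        lines.append(f"English: {source}")
        lines.append(f"French: {target}")
        lines.append("")
    # The final item is left unanswered for the model to complete.
    lines.append(f"English: {query}")
    lines.append("French:")
    return "\n".join(lines)


examples = [
    ("sea otter", "loutre de mer"),
    ("cheese", "fromage"),
]

few_shot = build_few_shot_prompt("Translate English to French.", examples, "bread")
zero_shot = build_few_shot_prompt("Translate English to French.", [], "bread")

print(few_shot)
```

Passing an empty list of examples gives the zero-shot version of the same prompt, which makes it easy to compare how much the examples help.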

GPT-3 is still a machine, but it is able to learn from many examples. When we learn to drive a car, we practice in many different places to hone our skills. We start on roads with only a few cars. Then we move on to roads with denser traffic. And finally, having gained confidence, we drive along the busiest street to complete the skill. If you only ever drive on roads with moderate traffic, you will struggle to drive during rush hour. In the same way, if we want to get the best model, we need to provide it with varied training conditions.

GPT-3 has learned from an enormous amount of text, and at the time of writing it is one of the most capable language models in the field of AI. However, GPT-3 cannot behave exactly like we do. After all, kids don’t need to see millions of examples to learn something new. GPT-3 learns from the Internet and sometimes absorbs the negativity that is there. It can mimic natural language, but when it comes to natural thought, AI still falls short. There is a fine line between natural language and natural thought. GPT-3 showed that scaling up a language model can improve the quality of what it says. To use language that reads like a human’s, a machine does not need a soul, only a huge amount of data.

When I was a child, our teacher gave us the first part of a story and asked us to write a continuation. I spent hours inventing plot twists and then bringing them to my teacher. Every time I got low marks and did not understand why.

For many years I continued to do this and still did not understand the reason. However, one day it dawned on me that I was doing everything wrong. I was too busy focusing on my creative thoughts, but all it took to get a good grade was the absence of grammatical errors. Apparently, the teacher was teaching us to "write" and not to "think creatively." Still, I must admit that grammar was not my strong point, unlike creating an interesting plot.

This is exactly what GPT-3 does. It draws its knowledge from previous works created by humans, and all it cares about is producing words that look like human thoughts. It focuses on "style" rather than on "creativity or understanding causes". Grammar is its programming language.

It cannot be denied that it is good at predicting likely word choices. However, GPT-3 is designed neither to store facts nor to retrieve them at the right time, as the human brain does. It is more like an algorithm for fitting keywords into a template, as is the case with SEO.

Such a machine, even after meeting people through the Internet, lacks human features. It’s just an algorithm, and it’s unreasonable to expect it to be able to "voice" its private thoughts. It still lacks the kind of intelligence that separates humans from machines. I hope it stays that way (at least for a while).

Sourced from Towards AI.

Cover image: Kasia Bojanowska